In a previous article we discussed ethics guidelines and assessed how usable they are in the public sector. To find out how they work in practice, we spoke with data scientist Maarten Sukel. He is an AI developer at the municipality of Amsterdam and a PhD researcher, and he develops algorithms for city services.

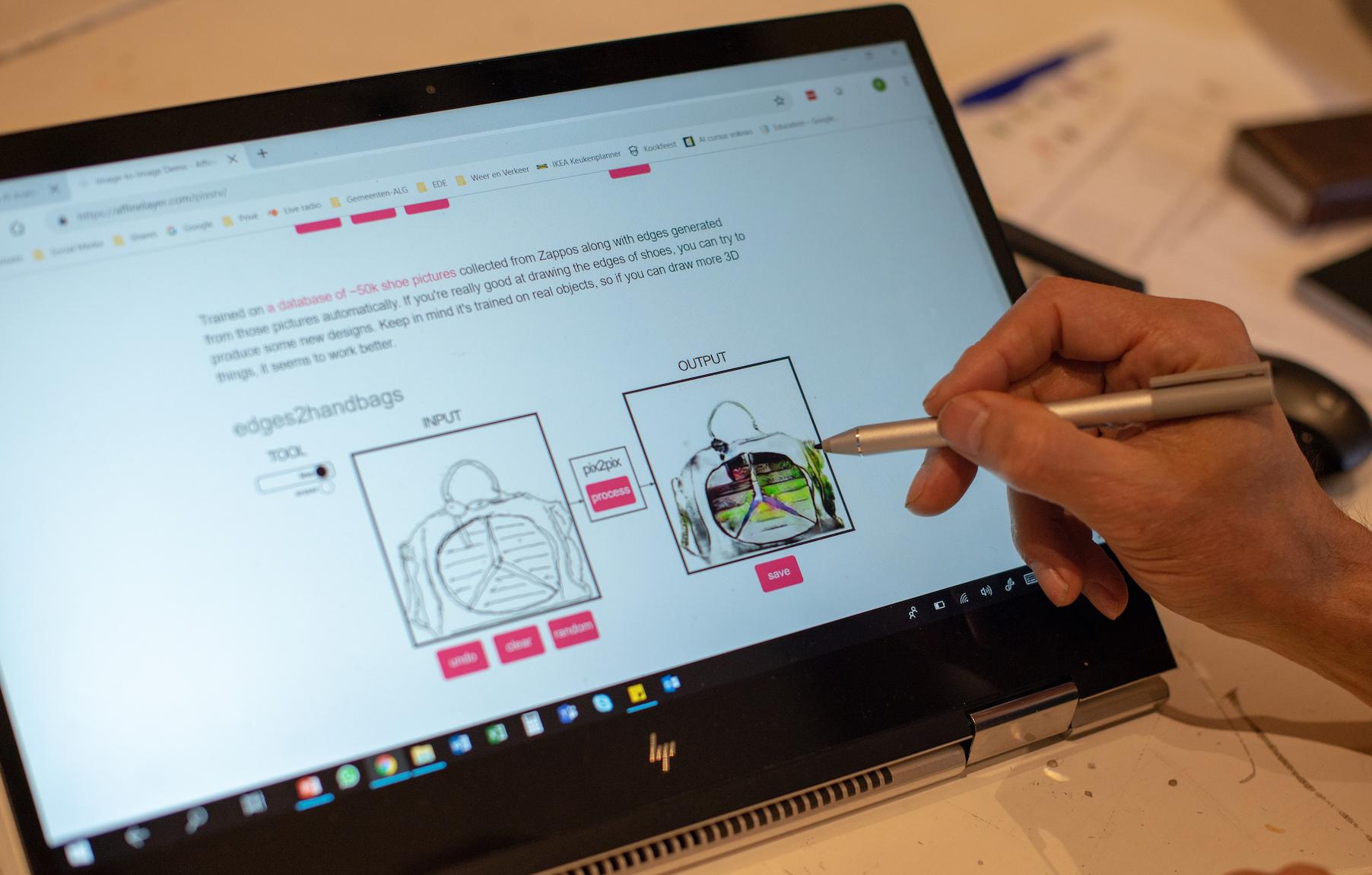

Maarten developed an algorithm, now in use at the municipality of Amsterdam, that classifies citizen service requests: it classifies the text of each request and assigns the request to the appropriate department. He also works on object detection models for an application that scans for garbage in Amsterdam, with the aim of improving the city's garbage collection services.

In our conversation about how ethics guidelines function in practice, we focused primarily on the ethics principles set out by the European Union's High-Level Expert Group on AI. Are guidelines like these useful for developers to apply and implement? Here is what we learnt.

The format of ethics principles

In general, ethics principles aim to foster a responsible environment in which both developers and users benefit from technology. But is a list of principles a helpful format for developers to apply while building an AI system? According to Maarten and other critics, not necessarily. There is a risk that the guidelines end up functioning as checklists whose boxes can simply be ticked, even when not all requirements are met.

“I agree with most of the points they say, but when I see it in practice, these sorts of assessments create a lot of paperwork. I don’t have the idea that it is actually checked before something is developed. A lot of discoveries are made because the right data was there, somebody went through it and created something, and later the ethical part is being covered,” says Maarten.

Another problem with the principled format is that the diversity of fields, applications and interests forces the principles to a high level of abstraction, so they rely on general terms such as fairness, diversity, and societal and environmental well-being.

Developers who build algorithms for robot arms or self-driving cars, who design more efficient systems for collecting garbage in the city, or who create Facebook's ranking algorithms have few interests in common, because they are guided by different values and concerns. As a result, principles can only function as general suggestions that have to be translated to each individual case.

Next steps

Much work remains to be done before algorithms are fair, transparent, secure and socially accountable. According to Maarten, we should train software developers and data scientists in the ethics of AI, because they are the ones who will create these applications.

Philosopher Brent Mittelstadt, a critic of the principled format, suggests that it might be necessary to formally establish AI development as a profession, with standing equivalent to that of other high-risk professions.

Another important task for developers, Maarten suggests, is to keep communicating and to be open about what these technologies do, even when privacy regulations make it impossible to share everything.

A more detailed assessment of several of the individual principles, based on the discussion with Maarten Sukel, is available here.