Every day we are put in boxes based on our data, without having any say in it. If you watch a movie on Netflix, the algorithm will recommend more of the same kind of film, and if you live in an expensive neighbourhood, the price of a flight online may suddenly be higher. Everyone is continuously categorised by algorithms. These examples are relatively harmless, but what happens if you do not get a job, or have to stay in jail longer, based on your data?

In her book Weapons of Math Destruction, Cathy O'Neil describes how classification systems can have a negative impact on society. A dangerous aspect of these algorithms is their use of data as a proxy, such as using address data to estimate how likely you are to commit a crime. The algorithms only look at people like you, not at who you are.

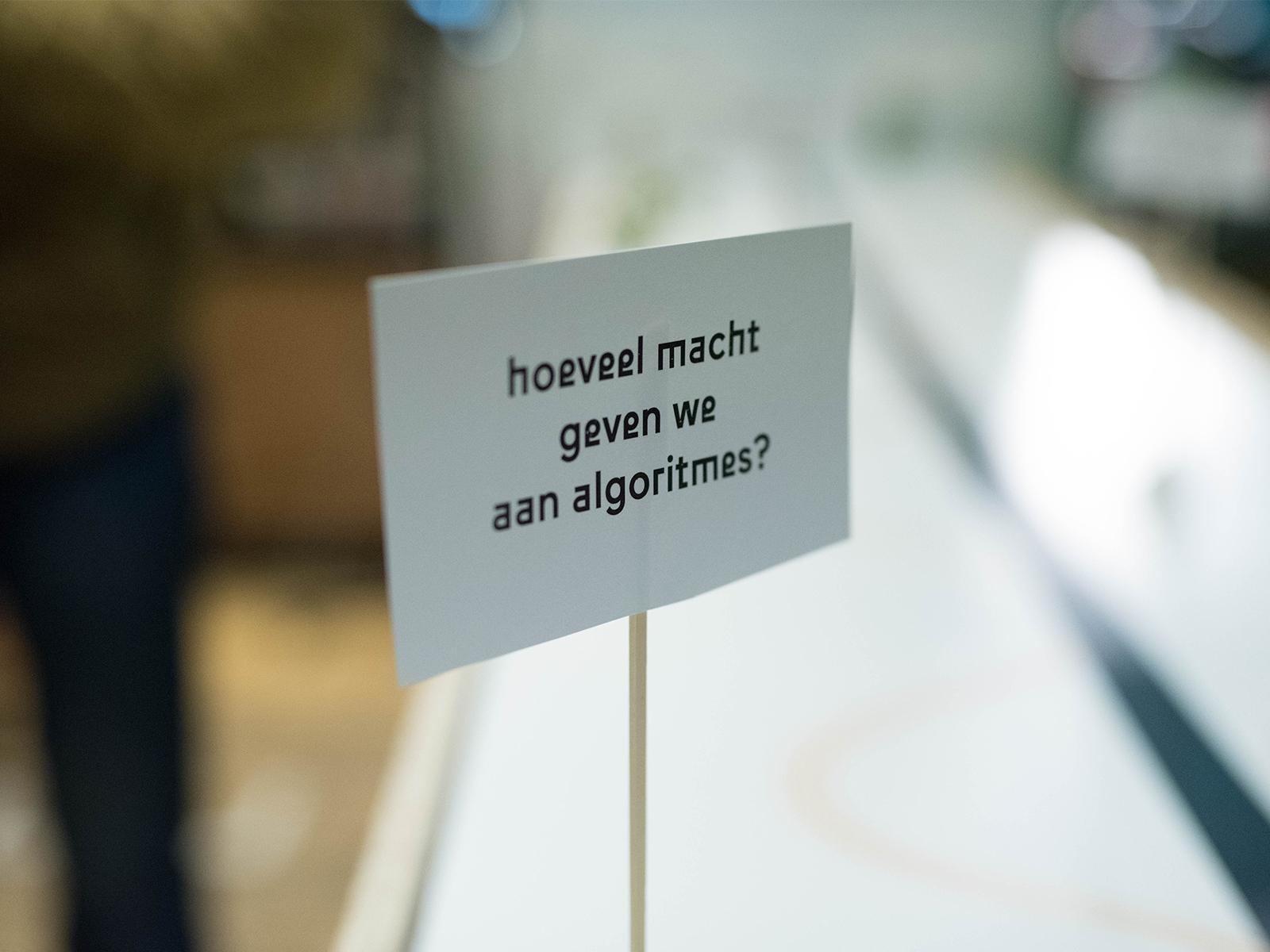

Over the past few months, as part of my internship at Waag, I have researched how algorithms can turn people into a set of data points on which they can be assessed. In this study, I discovered that algorithms are often largely the opinions of the people who made them. The choice of which data is used and how it is weighted strongly affects the result.
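To make that last point concrete, here is a minimal sketch in Python. It is not taken from the project: the applicants, the feature names and the weights are all made up for illustration. The same two people are ranked twice, and only the weights change.

```python
# Hypothetical applicants described by two made-up features on a 0..1 scale.
applicants = {
    "A": {"experience": 0.9, "postcode_score": 0.2},
    "B": {"experience": 0.5, "postcode_score": 0.9},
}


def rank(weights):
    """Return applicant names sorted by weighted score, best first."""
    def score(features):
        return sum(weights[name] * value for name, value in features.items())

    return sorted(applicants, key=lambda name: score(applicants[name]), reverse=True)


# Two designers, two opinions about what matters.
skill_first = rank({"experience": 0.8, "postcode_score": 0.2})
proxy_first = rank({"experience": 0.2, "postcode_score": 0.8})

print(skill_first)  # A ranks above B
print(proxy_first)  # B ranks above A
```

Nothing about the applicants changed between the two runs. The only difference is the designer's view of how much a proxy such as a postcode should count.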

Under the guidance of Tom Demeyer and Stefano Boccini, I developed a framework for recognising types of people on the basis of their photos. To conclude this research, I built an installation of several Tinder chatbots that look at photos and assess them on various factors. Each bot then decides whether the person behind the profile suits it.

To train the algorithm, I searched for clichés that often occur on Tinder. Men pose with their cars or show off their abdominal muscles, and holiday and festival photos are popular among all users. That is why I trained an algorithm to recognise abdominals, cars and beach photos, and gave each of the six Tinder bots its own preferences for what it was looking for.
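As a rough illustration of how a bot with its own preferences could turn recognised photo content into a decision, consider the sketch below. It is not the installation's actual code: the `Bot` class, the bot names, the scoring rule and the label strings are assumptions, and the image recognition step is replaced by a hard-coded list of labels.

```python
from dataclasses import dataclass


@dataclass
class Bot:
    """A hypothetical bot with its own taste in photo clichés."""
    name: str
    likes: set
    dislikes: set

    def swipes_right(self, detected_labels):
        # Count liked clichés minus disliked ones; swipe right if positive.
        labels = set(detected_labels)
        score = len(labels & self.likes) - len(labels & self.dislikes)
        return score > 0


# Two made-up bots; the installation described here used six.
bots = [
    Bot("beach_lover", likes={"beach"}, dislikes={"car"}),
    Bot("car_fan", likes={"car"}, dislikes={"abs"}),
]

# Stand-in for the classifier output on one profile's photos (assumed labels).
profile_labels = ["beach", "abs"]

decisions = {bot.name: bot.swipes_right(profile_labels) for bot in bots}
print(decisions)
```

The same profile is accepted by one bot and rejected by the other, which is the point: the judgment follows from the preferences written into each bot, not from the person in the photo.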

The first reactions to the bots were divided. Many Tinder users felt fooled or did not agree with the judgment of the algorithm. Others were surprised and found it an interesting initiative. The project was concluded at Sociëteit Sexyland, where results were discussed with various speakers.