58% of the Dutch population consider the topic ‘Fake news, fake pictures and polarisation’ crucial for the development of, and research into, Artificial Intelligence (AI).

This is according to a large-scale survey conducted by Waag Futurelab on behalf of the Dutch AI Coalition (NL AIC) in collaboration with Ipsos I&O. The survey builds on an earlier study, carried out between June 2023 and March 2024, in which we used a citizen panel of 100 people and a survey to define 12 key themes. For this follow-up, we presented these themes to a representative group of 1,327 respondents.

Fake news, power relations and privacy

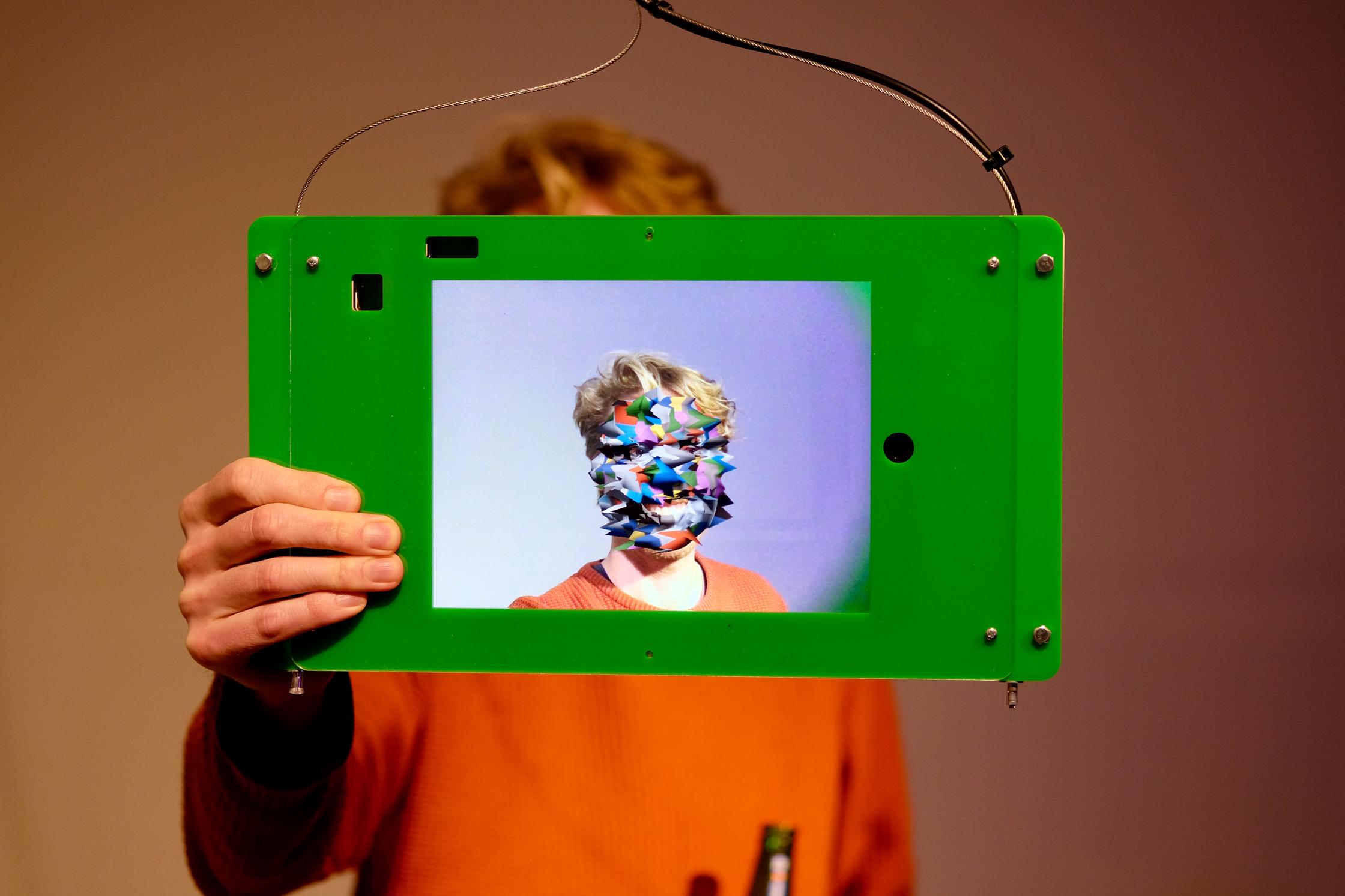

The survey's key finding is that fake news and polarisation were mentioned most often, with respondents expressing concern about the spread of false information and about deepfake technology. 68% of respondents see this as a crucial issue for future research on AI.

The Dutch are also concerned about power relations and the control that large tech companies and governments may gain through the use of AI technology. 37% of respondents consider this an important theme for further research.

Technology that monitors people, along with questions of freedom, responsibility and accountability, was also seen as an important theme. One in three respondents named these topics as important.

The survey also showed that the vast majority of the Dutch population has a reasonable to good understanding of AI and makes meaningful associations with it. Only a small group indicated that they had no idea what AI is.

How to move forward?

The findings of this study call for a response from everyone who plays a role in the development of AI in the Netherlands. At present, society is rarely involved in a systematic way when research agendas are drawn up. Based on these findings, we recommend the following next steps:

- Take concrete action to combat fake news and fake images, by investing both in strategic projects and in open calls in which parties from society itself can participate.

- Make society the client of projects and calls that serve a social purpose: the government, science and companies should not be the only ones to decide which path the development of AI takes.

- Establish, together with society, frameworks that set out what AI must comply with. These frameworks should define the minimum level of comprehensibility and transparency required of AI, and what it means to safeguard public values such as freedom, democracy, inclusiveness, privacy and security in design.

- Create trust in AI by developing AI that deserves that trust, by:

a. Giving people a chance (through co-creation) to influence the development of AI and using their values as the basis for design.

b. Increasing knowledge and experience of AI among society through education and experimentation for all.

c. Setting up an organisation or hotline that monitors AI and to which people can turn.

Research report and visualisation

Through this link, you can read the full research report, including our recommendations (in Dutch). We have also incorporated the results of the research into the visualisation below (in Dutch).